I’ve been experimenting with local language models for the past few months, but the setup process always felt like more work than it should be. Between managing dependencies, configuring model parameters for my specific hardware, and juggling command-line tools, running AI models locally on my Mac was technically possible but rarely convenient. That changed when I discovered LlamaBarn.

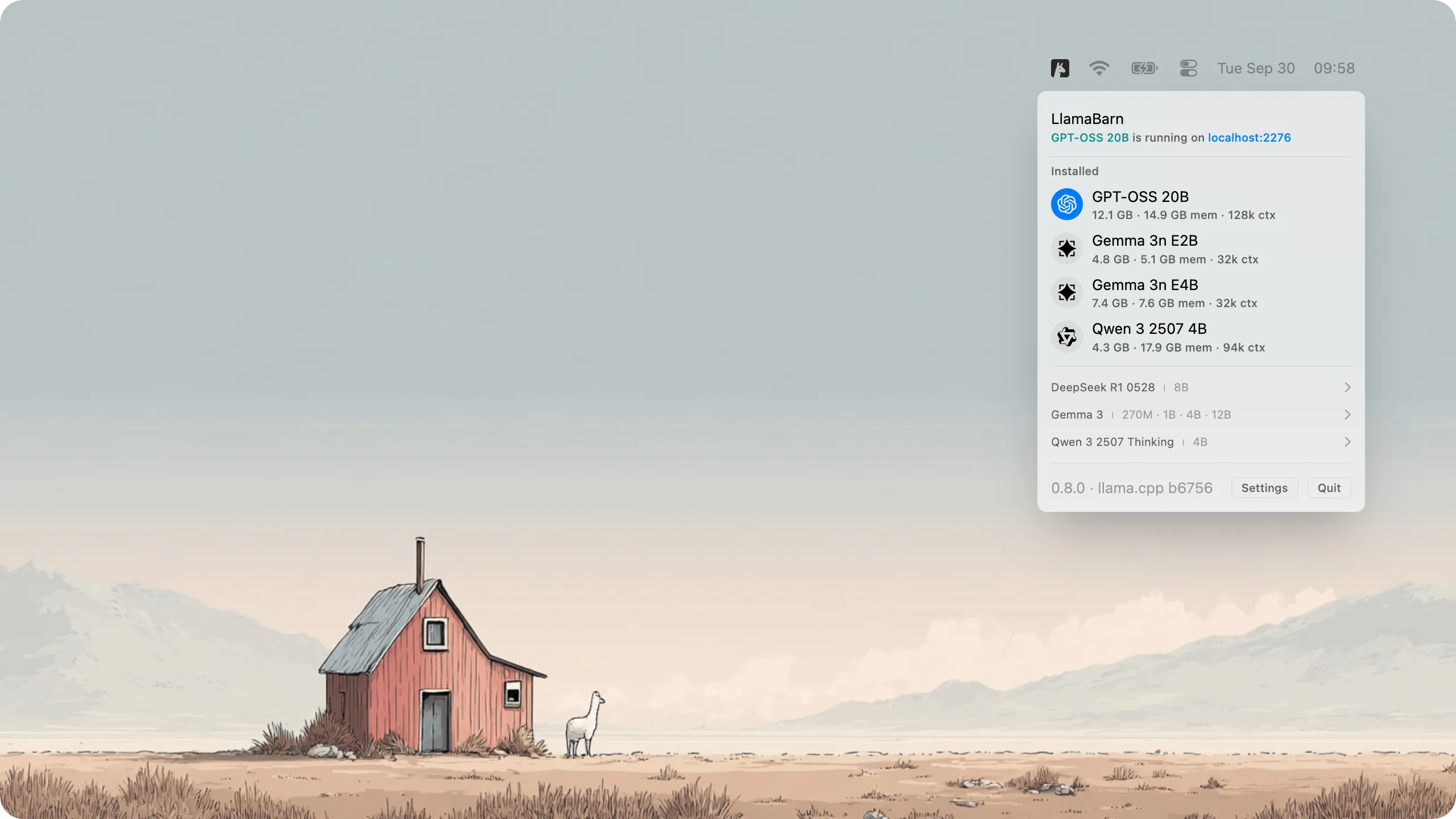

LlamaBarn bills itself as “a cosy home for your LLMs,” and that’s exactly what it delivers. It’s a menu bar app that makes running local language models as simple as clicking an icon. No terminal commands, no configuration files to edit, no guessing which parameters work best with your hardware. You install it via Homebrew (`brew install --cask llamabarn`), pick a model from the curated catalog, and you’re ready to chat with AI running entirely on your machine.

The app is remarkably lightweight at around 12 MB and stores everything cleanly in `~/.llamabarn` without modifying your system. What impressed me most is how it automatically optimizes model configurations based on your Mac’s hardware. Whether you’re running an M2 MacBook Air or an M4 Mac mini, LlamaBarn detects your specs and adjusts accordingly. This kind of thoughtful automation removes the guesswork that usually comes with local AI experimentation.

Using LlamaBarn is straightforward. Once a model is loaded, you can interact with it through the built-in web interface or connect via the REST API at http://localhost:2276. The API is OpenAI-compatible, which means tools built for the OpenAI API can work with your local models with minimal modifications, usually just a change of base URL. It supports parallel requests and can handle image input when the loaded model has vision support. For developers integrating local AI into their workflows, this is a significant time-saver.
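
To make the OpenAI-compatible part concrete, here is a minimal sketch of what a request to the local server might look like using only Python’s standard library. The base URL is the one mentioned above; the `/v1/chat/completions` path is my assumption based on the usual OpenAI-style route, and `example-model` is a hypothetical placeholder, so substitute whatever model identifier LlamaBarn shows for the model you installed.

```python
import json
import urllib.request

BASE_URL = "http://localhost:2276"      # address given in this post
CHAT_PATH = "/v1/chat/completions"      # assumption: standard OpenAI-style route
MODEL_NAME = "example-model"            # hypothetical placeholder, not a real model id


def build_chat_request(prompt, model=MODEL_NAME, base_url=BASE_URL):
    """Build (but do not send) a chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the prompt and return the reply text.

    Assumes the response follows the OpenAI chat completion shape
    (choices -> message -> content).
    """
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a model loaded, calling `ask("Say hello in five words.")` should return the model’s reply as a string, provided the assumptions above hold. Keeping request construction separate from sending makes it easy to inspect the exact JSON going over the wire before anything hits the server.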

The project is open source under an MIT license and actively maintained by the ggml-org team, the same folks behind the llama.cpp infrastructure. The latest release (version 0.11.0) came out in November 2025, and with over 500 stars on GitHub, there’s a healthy community around it. For anyone concerned about privacy or simply curious about running AI without cloud dependencies, LlamaBarn removes most of the friction.

This app sits in that sweet spot between “technically complex” and “actually useful.” It’s not trying to replace cloud AI services entirely, but it makes local models accessible enough that you’ll actually use them. Whether you’re a developer testing AI integrations offline, a privacy-conscious user who wants control over their data, or just someone who enjoys exploring new technology, LlamaBarn is worth installing.